It is usually stated that substantial language versions (LLMs) along the lines of OpenAI’s ChatGPT are a black box, and definitely, there is some truth to that. Even for info scientists, it’s difficult to know why, normally, a design responds in the way it does, like inventing details out of entire fabric.

In an work to peel back the layers of LLMs, OpenAI is creating a tool to automatically determine which pieces of an LLM are responsible for which of its behaviors. The engineers behind it anxiety that it’s in the early stages, but the code to operate it is out there in open resource on GitHub as of this early morning.

“We’re attempting to [develop ways to] anticipate what the challenges with an AI procedure will be,” William Saunders, the interpretability crew manager at OpenAI, explained to TechCrunch in a mobile phone job interview. “We want to genuinely be able to know that we can rely on what the design is executing and the solution that it provides.”

To that close, OpenAI’s tool works by using a language design (ironically) to figure out the capabilities of the components of other, architecturally more simple LLMs — specifically OpenAI’s individual GPT-2.

OpenAI’s software makes an attempt to simulate the behaviors of neurons in an LLM.

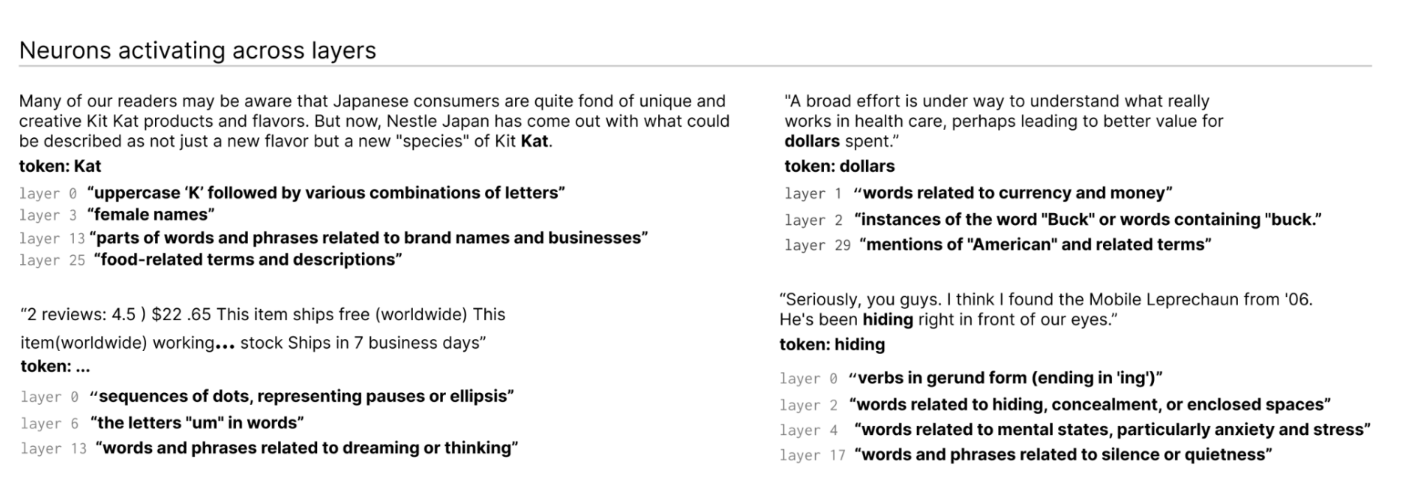

How? Initial, a brief explainer on LLMs for track record. Like the brain, they are made up of “neurons,” which observe some precise pattern in textual content to affect what the total product “says” next. For case in point, presented a prompt about superheros (e.g. “Which superheros have the most practical superpowers?”), a “Marvel superhero neuron” could possibly enhance the chance the product names certain superheroes from Marvel movies.

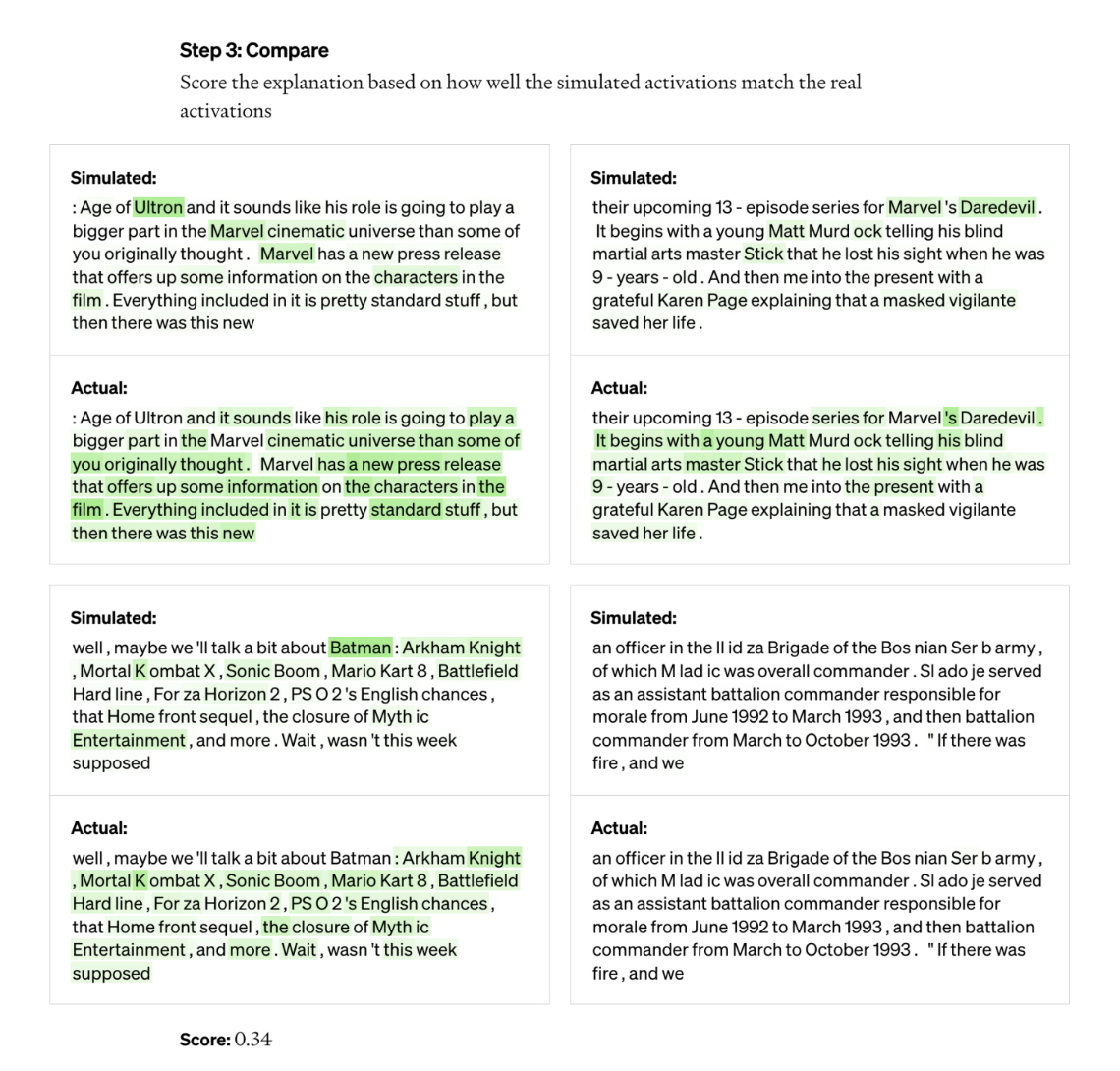

OpenAI’s instrument exploits this set up to split designs down into their specific parts. Initially, the instrument operates textual content sequences by means of the design becoming evaluated and waits for situations where a particular neuron “activates” often. Up coming, it “shows” GPT-4, OpenAI’s most recent textual content-building AI model, these very energetic neurons and has GPT-4 produce an explanation. To determine how precise the explanation is, the device delivers GPT-4 with textual content sequences and has it predict, or simulate, how the neuron would behave. In then compares the behavior of the simulated neuron with the habits of the actual neuron.

“Using this methodology, we can in essence, for each one neuron, arrive up with some variety of preliminary pure language clarification for what it is undertaking and also have a rating for how how properly that rationalization matches the real behavior,” Jeff Wu, who potential customers the scalable alignment workforce at OpenAI, explained. “We’re utilizing GPT-4 as aspect of the process to develop explanations of what a neuron is searching for and then rating how properly people explanations match the truth of what it’s undertaking.”

The researchers have been in a position to produce explanations for all 307,200 neurons in GPT-2, which they compiled in a knowledge set that’s been unveiled along with the software code.

Instruments like this could 1 working day be used to increase an LLM’s performance, the researchers say — for instance to slash down on bias or toxicity. But they admit that it has a prolonged way to go prior to it is genuinely helpful. The device was self-assured in its explanations for about 1,000 of those people neurons, a modest fraction of the total.

A cynical person could argue, too, that the instrument is basically an advertisement for GPT-4, given that it requires GPT-4 to function. Other LLM interpretability instruments are fewer dependent on commercial APIs, like DeepMind’s Tracr, a compiler that translates systems into neural network versions.

Wu said that is not the case — the fact the tool makes use of GPT-4 is merely “incidental” — and, on the contrary, shows GPT-4’s weaknesses in this space. He also mentioned it wasn’t created with commercial applications in brain and, in concept, could be adapted to use LLMs aside from GPT-4.

The software identifies neurons activating throughout layers in the LLM.

“Most of the explanations rating rather poorly or don’t demonstrate that a great deal of the actions of the genuine neuron,” Wu explained. “A whole lot of the neurons, for case in point, energetic in a way wherever it is really difficult to inform what is going on — like they activate on five or 6 distinctive things, but there is no discernible pattern. In some cases there is a discernible sample, but GPT-4 is unable to discover it.”

That’s to say very little of much more complex, more recent and larger styles, or models that can browse the world wide web for info. But on that second point, Wu thinks that web searching would not change the tool’s underlying mechanisms considerably. It could simply be tweaked, he suggests, to figure out why neurons choose to make particular research motor queries or accessibility certain internet sites.

“We hope that this will open up up a promising avenue to deal with interpretability in an automatic way that other folks can develop on and lead to,” Wu stated. “The hope is that we seriously truly have excellent explanations of not just not just what neurons are responding to but all round, the conduct of these products — what types of circuits they are computing and how certain neurons have an effect on other neurons.”